DARPA Picking Humans for Uploading to Technology

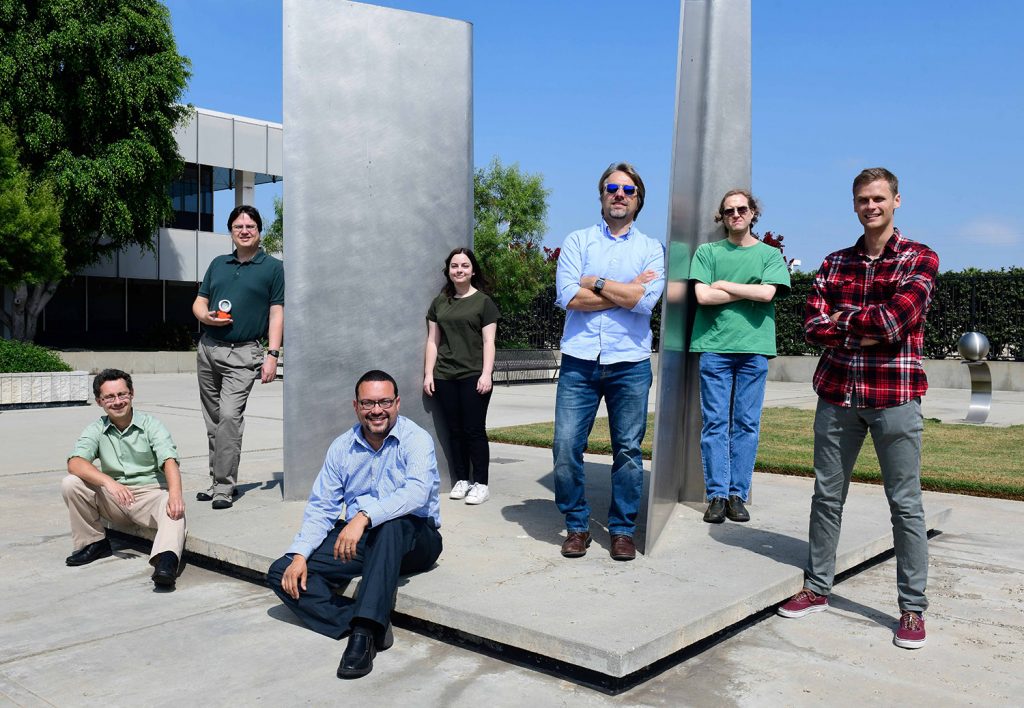

Aerospace Corporation's "Team Platypus" won the $100,000 grand prize in an Army competition to apply artificial intelligence and machine learning to electronic warfare.

WASHINGTON: This afternoon, DARPA announced a five-year, $2 billion "AI Next" program to invest in artificial intelligence, with 2019 AI spending alone jumping 25 percent to $400 million. It's all part of a large Pentagon push to compete with China.

The vision is for future weapons and sensors, robots and satellites, to work together in a global "mosaic," DARPA director Steven Walker told reporters. Rather than rely on slow-moving humans to coordinate the myriad systems, he said, you're "building enough AI into the machines so that they can actually communicate and network (with each other) at machine speed in real time."

Steven Walker

One near-term example is DARPA's Blackjack program, Walker said. Blackjack will build a network of small, affordable surveillance satellites in low Earth orbit that can communicate with each other and coordinate their operations without constant human control. But, like most DARPA projects, Blackjack is merely a "demonstration" that the technology can work, not an operational system.

DARPA's specialty is long-term gambles, high risk and high reward, and Walker himself acknowledged that the AI technology of today still faces severe limits. So how can we cut through the hype to see what artificial intelligence and machine learning can really do for the US military in the near future?

One answer, in microcosm, might be a much more modest $100,000 prize that the Army's Rapid Capabilities Office – whose mission is meeting urgent frontline needs – recently awarded to the gloriously named Team Platypus. The near-term payoff for military AI isn't replacing human soldiers in the physical world, but empowering them to understand the world of radio waves. That's an invisible battlefield which Russia's powerful electronic warfare corps is poised to dominate in a future war, unless the US can catch up.

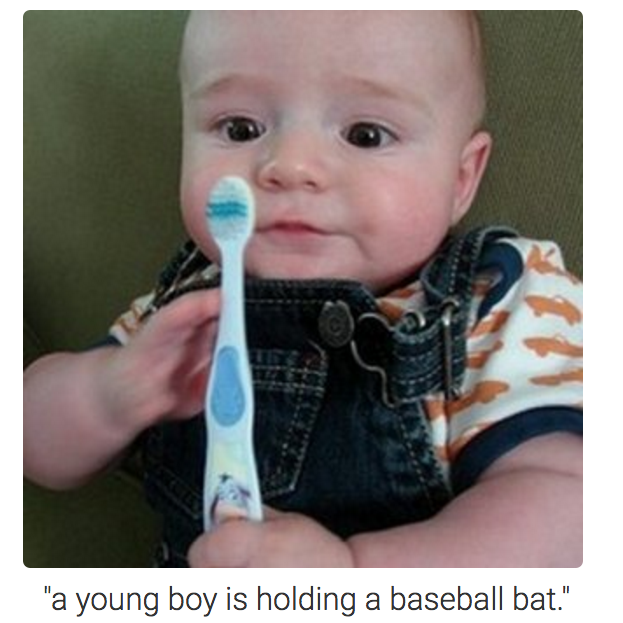

An example of the shortcomings of artificial intelligence when it comes to image recognition. (Andrej Karpathy, Li Fei-Fei, Stanford University)

Humans Vs. Computers: Who Wins Where?

The Army Rapid Capabilities Office wasn't asking for killer robots. While the service does want to develop unmanned mini-tanks by the mid-2020s, it insists a human will make all shoot/don't shoot decisions by remote control. As of right now, robotic supply trucks are still learning how to follow a human driver through rough off-road terrain, and the Army insists on a soldier leading every convoy.

The whole military is keenly interested in artificial intelligence to sort through hundreds of terabytes of surveillance imagery and video collected by satellites and drones, sorting terrorists from civilians and legitimate targets from, say, hospitals. That's the purpose of the infamous Project Maven, which Google pulled out of on ethical grounds (while secretly helping China censor Google Search). But AI object recognition is another nascent science. Former DARPA director Arati Prabhakar liked to show reporters an image of a baby playing with a toothbrush that a cutting-edge AI had labeled "a young boy is holding a baseball bat."

But there are some areas where artificial intelligence can already outdo humans. Chess and Go are only the highest-profile cases. These phenomena tend to involve two things: There's a staggering number of potential outcomes, but each outcome is rigidly defined, with no ambiguity. That makes them overwhelming for human intuition but amenable to the crisp either-or of binary code.

Andres Vila

Militarily relevant applications tend to involve the invisible dance of electrons: detecting computer viruses and telltale anomalies in networks, for instance, or interpreting the inaudible buzzing of radio-frequency signals. That's where the Army went looking for AI help.

"Humans are actually good at some of these problems, like image recognition," said Andres Vila, the US-educated, Italo-Colombian head of the triumphant Team Platypus. Compared to machines, he told me, "humans still have the advantage at picking out chairs and cats (from other objects), but how many humans do you need to process a bunch of data?"

When it comes to radio communications, however, machines have the advantage even for analyzing a single signal, let alone large amounts of data. Instead of comparing what you see on a screen to a booklet of known signals, flipping pages until you find one that looks right, the software can check the precise figures against millions of potential matches.

"For this kind of comms problem, machine probably is better than human," Vila said in an interview. "We can't sit there and look at the raw signals that output and classify them correctly. There is just no way our own eyes could do it."

Into the Matrix

Radio waves are vital to a modern military, carrying everything from verbal orders to targeting data, from search radar to electronic jamming. But unlike the signal flags or marching drums of past battlefields, radio is something soldiers can neither see nor hear.

It'd be cool if we could train humans to see radio, chuckled Rob Monto, director of emerging technologies for the Army Rapid Capabilities Office: "It'd be like the Matrix." But until someone develops cyborg eyes, we need machines to see for us – and the smarter the machine, the better.

The Army disbanded its Combat Electronic Warfare Intelligence (CEWI) units, like the one shown here, after the Cold War.

Traditionally, human specialists in signals intelligence and electronic warfare stared at screens displaying such data as the strength, direction, and modulation of radio signals. Then they compared the readings to a catalog of known enemy systems, each of which had a unique way of transmitting based on its hardware. Back then, changing a radio's or radar's emissions required physically rewiring it.
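To make the catalog comparison concrete, here is a minimal Python sketch of a nearest-match lookup. It is an illustration only, not the Army's or any team's software: the catalog entries, the two features (center frequency and bandwidth), and the function name `closest_match` are all hypothetical.

```python
import math

# Hypothetical catalog (assumption, not real emitter data):
# name -> (center frequency in MHz, bandwidth in kHz)
CATALOG = {
    "emitter_a": (433.9, 25.0),
    "emitter_b": (915.0, 500.0),
    "emitter_c": (2437.0, 20000.0),
}

def closest_match(measured, catalog=CATALOG):
    """Return the catalog name whose figures are nearest to the measurement."""
    def relative_distance(known):
        # Compare each figure relative to the catalog value so that
        # frequency and bandwidth contribute on a similar scale.
        return math.sqrt(sum(((m - k) / k) ** 2 for m, k in zip(measured, known)))
    return min(catalog, key=lambda name: relative_distance(catalog[name]))

# A reading near 915 MHz with roughly 480 kHz of bandwidth
print(closest_match((914.2, 480.0)))
```

A human flipping through a booklet does the same comparison by eye; software can simply repeat it over far more entries.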

But modern software-defined radio can emit a wide variety of signals from the same piece of equipment, and you can change those signals as easily as uploading new software. What's more, because this technology has become so cheap and compact, the military aren't the only people running around with radios anymore. You probably have one in your pocket: It's called a cell phone. More radio emissions are coming from wireless networks, digitally controlled car engines, and even baby monitors.

So not only can the enemy easily alter the signals their systems emit: They can also hide those changing signals amidst a zoo of civilian signals that are themselves constantly changing. If old-school electronic warfare was like finding a needle in a haystack – not impossible if you use a magnet – modern EW is like finding a particular needle in a needle factory…in the middle of a tornado.

It doesn't matter how many humans you have staring at screens: They won't be able to keep up. "The amount of brain matter you'd need to apply is not just practical," Monto told me. You need something that thinks not only faster than an organic brain, but differently: an artificial intelligence.

Russian Krasukha-2 radar jamming system, reportedly deployed in Syria

The Challenge

The Army created its Rapid Capabilities Office to bypass the usual, laborious procurement system, which tends to take so long that soldiers are only issued new technology long after the commercial sector has made it obsolete. In this case, instead of holding a traditional competition for a government contract, the RCO copied DARPA and held a "challenge," offering cash prizes to whatever corporate or academic team could best perform a given task.

Seven autonomous cybersecurity systems face off for the DARPA Cyber Grand Challenge in 2016. DARPA has pioneered using competitions instead of traditional contracts.

Specifically, at the start of the 90-day competition, the Army provided each contender a starter database describing different radio signals, which they could use to train their software. Then the Army issued two additional datasets that the software would have to analyze "blind," with no identifying information. The winners would be the software that accurately classified the most signals in the shortest amount of time. That's the kind of problem best suited for machine learning: large amounts of precise information for the algorithms to learn on by trial and error, with unambiguous standards for success or failure.
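The shape of that contest (train on labeled examples, classify a blind set, get scored on accuracy and time) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the two-number "signals," the labels `type_1` and `type_2`, and the nearest-centroid method are all stand-ins chosen for brevity, not the Army's data or any team's actual algorithm.

```python
import time
from collections import defaultdict

def train(labeled):
    """Average the feature vectors of each label into one centroid per label."""
    sums, counts = {}, defaultdict(int)
    for features, label in labeled:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def classify(centroids, features):
    """Pick the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def score(centroids, blind, answer_key):
    """Return (fraction classified correctly, seconds taken) on a blind set."""
    start = time.perf_counter()
    predictions = [classify(centroids, f) for f in blind]
    elapsed = time.perf_counter() - start
    correct = sum(p == a for p, a in zip(predictions, answer_key))
    return correct / len(answer_key), elapsed

# Hypothetical starter data: (features, label) pairs
starter = [((1.0, 0.1), "type_1"), ((1.2, 0.0), "type_1"),
           ((5.0, 4.9), "type_2"), ((5.2, 5.1), "type_2")]
centroids = train(starter)

# Blind set: features only; the organizers hold the answer key
blind = [(0.9, 0.2), (4.8, 5.0), (1.1, 0.1)]
accuracy, seconds = score(centroids, blind, ["type_1", "type_2", "type_1"])
print(accuracy, seconds)
```

The unambiguous scoring is the point: every blind signal has exactly one right label, so success or failure reduces to a number.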

More than 150 teams participated to some degree, of which 49 made it to the actual competition. All of them paid their own way. The Army only had to shell out

- $20,000 for the third place finisher, the private sector team THUNDERING PANDA (allcaps is mandatory) from Motorola;

- $30,000 for second place TeamAU, a group of individual scientists from Australia; and

- $100,000 for the champion, Team Platypus from the nonprofit, federally funded Aerospace Corporation.

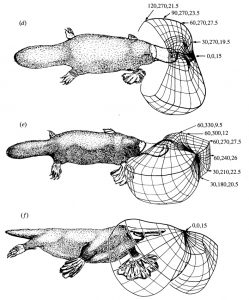

Vila had joined Aerospace a few years ago specifically to work on this problem, realizing that all the machine learning technology Google and Facebook had developed for image recognition would translate well to radio signal classification. His team of 8 engineers from multiple countries named itself in honor of the platypus, which can sense the electromagnetic fields of its prey.

The platypus uses electromagnetic fields to sense and hunt down its prey. (Meera Pate, Reed College, "Platypus Electroreception")

"This Army challenge actually showed up at the perfect time to kickstart this project," Vila told me. But he had never worked with the military before, he said. That makes him exactly the kind of innovator the Pentagon is desperate to reach.

So what comes next? The Army is looking at two options, the RCO's Rob Monto told me. One is to invite the high-performing teams from this challenge back for a second round, with a more sophisticated dataset and more challenging classification tasks, so they can further refine their software. The other is to get the software as-is into the hands of Army electronic warfare soldiers right away, so they can try it out in field conditions and give feedback. Ultimately the Army needs to do both software refinement and soldier testing, preferably in multiple rounds, but which comes next is still being studied.

At some point, whoever gets the actual contract will also need security clearance to look at classified data. The datasets used so far are unclassified, not secret data on potential enemies' radios, radars, and jammers. Now, sorting through civilian signals is already useful in itself, Monto said: By telling soldiers what's a cellphone, what's a coffee shop wifi, and so on, it can let them focus their human brainpower on the anomalies that might be enemy activity. But ultimately the Army wants the software to help classify military signals as well.

Popular culture's answer to "can we trust artificial intelligence?" (Courtesy Warner Brothers)

Ambiguity and Trust

Even cutting-edge artificial intelligence has its limits, Team Platypus's Vila told me. Most obviously, it still can't cope with novelty and ambiguity the way a human can. Machine learning is only as good as the data it was trained on. If you put AI up against "something absolutely brand new that we have never seen before… and couldn't have imagined, that isn't going to work," he told me.

This squid's thought process is less alien to you than an artificial intelligence would be.

The second, subtler problem is that modern AI is very hard, even impossible, for humans to understand. Humans may write the initial algorithms, but the code will mutate as the machine learning system works its way by trial and error through vast datasets. Unlike traditional "deterministic" software, where the algorithms stay the same and a given input always produces the same output, machine learning AI reaches its conclusions along paths that humans did not create and cannot follow.

"Especially since the deep learning revolution happened in 2012, and you have all these algorithms, really complex algorithms, (there's been) a lot of criticism…about how obscure, how opaque it is, how unreadable the results are," Vila acknowledged. "But at the same time, I don't think that has stopped the investment, (because) the performance is so, so much better than any other state of the art technique."

Confidence in artificial intelligence won't come from humans being able to audit the code line by line, Vila predicts, but from humans seeing it work consistently in real-world situations. That said, just as human eyes can be tricked by optical illusions, AI has quirks that enemies can exploit. (See our series on what we're calling "Artificial Stupidity.") So, Vila told me, "there needs to be an understanding across all levels, from the top commander all the way to the user, of what these algorithms can and cannot do, and even an understanding of how it can be fooled."

Computer scientists and Pentagon leaders use the mythical centaur to describe their ideal of close collaboration between human and artificial intelligence.

That fundamental question – how can you trust AI? – leads us back to DARPA's announcement today. Speaking to a small group of reporters after the agency's 60th anniversary symposium, DARPA director Steven Walker acknowledged that the applications of AI right now are "pretty narrow," because machine learning algorithms require large datasets and can't tell humans how the algorithm came to a specific conclusion.

So a major goal for DARPA's new program, and the focus for the $100 million in additional 2019 investment, is to develop a new generation of AI that can both function with smaller datasets and tell its users how and why it arrived at an answer. Walker called this the "third wave" of AI, after the first wave of deterministic rules-based systems and today's second wave of statistical machine learning from big data.

DARPA wants to "give the machine the ability to reason in a contextual way about its environment," Walker said. Only then, he said, can humans "move on from computer as a tool to computer as a partner."

Source: https://breakingdefense.com/2018/09/darpa-the-army-team-platypus-artificial-intelligence-for-future-war/
